DOST: A Distributed Object Segmentation Tool

M.S. Farid, M. Lucenteforte, M. Grangetto, "DOST: A Distributed Object Segmentation Tool," Multimedia Tools and Applications, Dec. 2017

Abstract: This paper presents a novel distributed object segmentation framework that extracts potentially large coherent objects from digital images. The proposed approach requires minimal user supervision and segments the objects accurately. It works in three steps, starting from user input in the form of a few mouse clicks on the target object. First, based on the user input, the statistical characteristics of the target distributed object are modeled with a Gaussian mixture model; this model provides the primary segmentation of the object. Second, the segmentation result is refined by connected component analysis to reduce false positives. Finally, the resulting segmentation map is dilated to select neighboring pixels that are potentially misclassified; this allows the segmentation to be recast as a graph partitioning problem that can be solved with the well-known graph cut technique. Extensive experiments have been carried out on heterogeneous images to test the accuracy of the proposed method on various types of distributed objects.

Examples of applying the proposed technique in remote sensing, to segment roads and rivers from aerial images, are also presented. Visual and objective evaluation, together with comparison against existing techniques, shows that the proposed tool can deliver optimal performance on tough object segmentation tasks.
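The three steps described in the abstract can be illustrated with a small, dependency-free sketch. It is not the authors' implementation and simplifies heavily: a single Gaussian over grayscale intensities stands in for the Gaussian mixture model, the connected-component rule (dropping components below a minimum area) is an assumption, and the final graph cut is omitted, so the last function only marks the band of uncertain pixels that a graph cut would relabel. All function names and parameters (`gaussian_mask`, `remove_small_components`, `uncertain_band`, `k`, `min_area`) are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def gaussian_mask(img, seeds, k=2.5):
    """Step 1 (simplified): fit one Gaussian to the seed intensities and
    mark every pixel within k standard deviations of the mean."""
    samples = [img[r][c] for r, c in seeds]
    mu = mean(samples)
    sigma = max(pstdev(samples), 1.0)  # floor avoids a zero-width model
    return [[abs(v - mu) <= k * sigma for v in row] for row in img]


def remove_small_components(mask, min_area):
    """Step 2 (assumed rule): drop 4-connected components below min_area."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            comp, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(comp) >= min_area:
                for y, x in comp:
                    out[y][x] = True
    return out


def uncertain_band(mask):
    """Step 3 (preparation only): dilate the mask with a 3x3 square and
    return the newly covered pixels, i.e. the candidates for relabeling."""
    h, w = len(mask), len(mask[0])
    band = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                continue
            band[r][c] = any(
                mask[ny][nx]
                for ny in range(max(0, r - 1), min(h, r + 2))
                for nx in range(max(0, c - 1), min(w, c + 2))
            )
    return band


# Toy 4x6 grayscale image: a bright 2x2 object plus one isolated bright pixel.
image = [
    [10, 10, 10, 10, 10, 10],
    [10, 200, 205, 10, 10, 10],
    [10, 198, 202, 10, 10, 201],
    [10, 10, 10, 10, 10, 10],
]
seeds = [(1, 1), (1, 2), (2, 1)]  # simulated user clicks on the object

coarse = gaussian_mask(image, seeds)
refined = remove_small_components(coarse, min_area=2)
band = uncertain_band(refined)

print(sum(map(sum, coarse)), sum(map(sum, refined)), sum(map(sum, band)))
```

On this toy image the isolated bright pixel passes the intensity model but is removed by the component filter, and the band is the one-pixel ring around the 2x2 object. A fuller implementation would fit a multi-component color model and pass the band pixels to a graph cut solver as the unknown region.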

Visual comparison of the results achieved by the proposed technique and the compared methods

In the following, we report the results achieved by the proposed technique and the compared methods on the test dataset comprising 8 images.

Subfigures order used in the following examples:

| (a) | (b) | (c) |
| (d) | (e) | (f) |

Fig. 1. Visual comparison of segmentation results of proposed tool and the compared methods on 'Chimpanzee' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

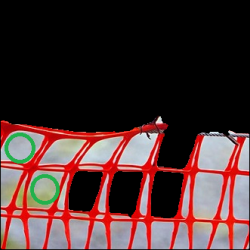

Fig. 2. Visual comparison of segmentation results of proposed tool and the compared methods on 'Sparrow' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

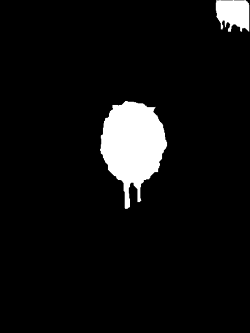

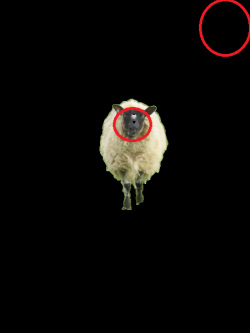

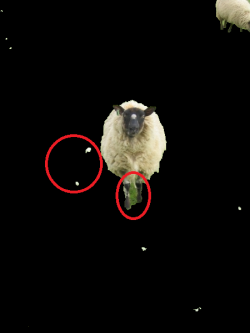

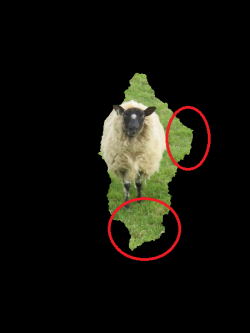

Fig. 3. Visual comparison of segmentation results of proposed tool and the compared methods on 'Sheep' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

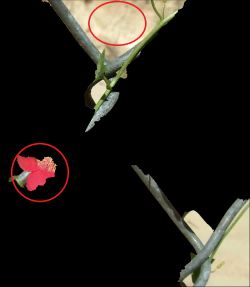

Fig. 4. Visual comparison of segmentation results of proposed tool and the compared methods on 'Snapdragon' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

Fig. 5. Visual comparison of segmentation results of proposed tool and the compared methods on 'Park' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

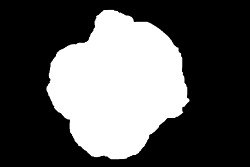

Fig. 6. Visual comparison of segmentation results of proposed tool and the compared methods on 'Rose' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

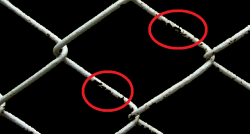

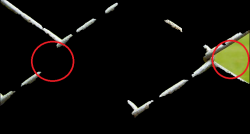

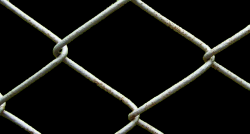

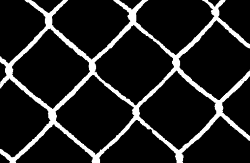

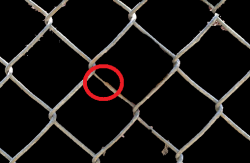

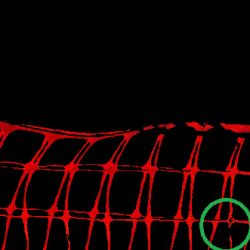

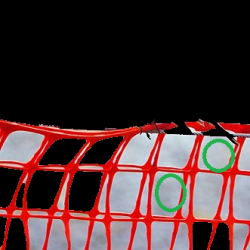

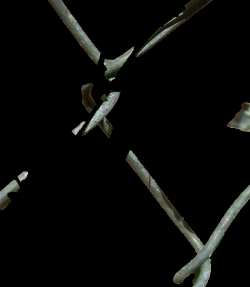

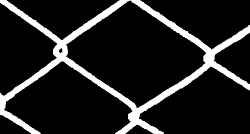

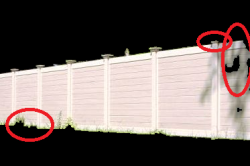

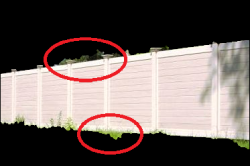

Fig. 7. Visual comparison of segmentation results of proposed tool and the compared methods on 'Wall' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

Fig. 8. Visual comparison of segmentation results of proposed tool and the compared methods on 'Bear' test image. (a) Input image, (b) ground truth segmentation, (c) results of [60], (d) results of [15], (e) results of [18], and (f) our results.

For more results and comparison with other techniques, please read the paper.

Last updated: October 19, 2017